Big Data Layer

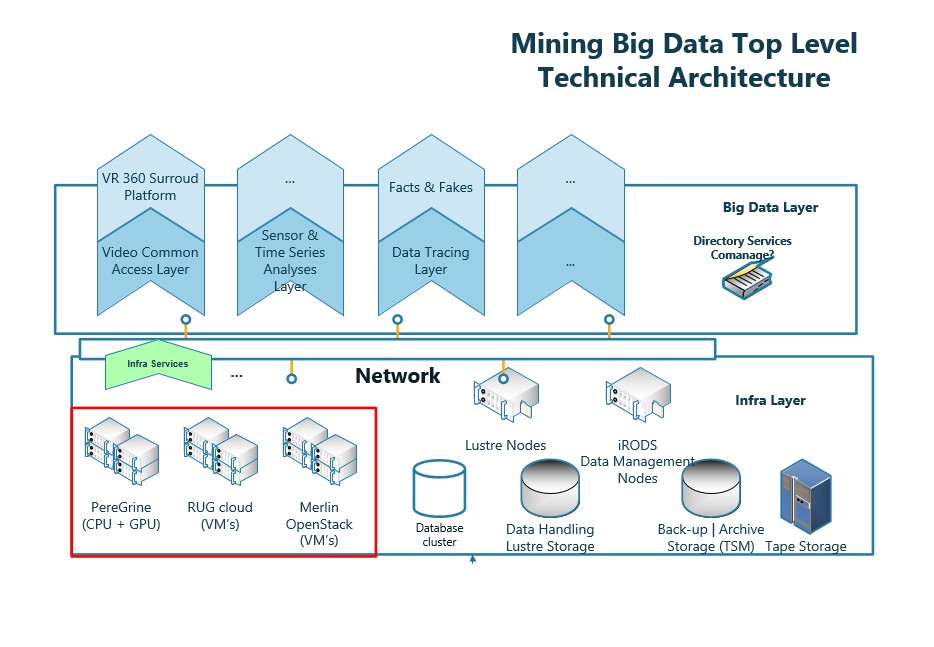

The Target Big Data layer consists of two layers.

The lower level is a common generic layer that acts as an abstraction layer over the hardware. On top of this generic layer sit domain-specific layers tuned to the specific ways of working (protocols) in the various disciplines. The domain-specific layers may themselves be called by application programmes and user interfaces. This is illustrated in the following overview.

The generic big data layer has a mixture of components.

- Some have been developed in-house, many of them for the earlier Target project
- Others are open-source systems for which we have expertise

Typical applications will use a sub-set of these components hosted on the appropriate underlying hardware.

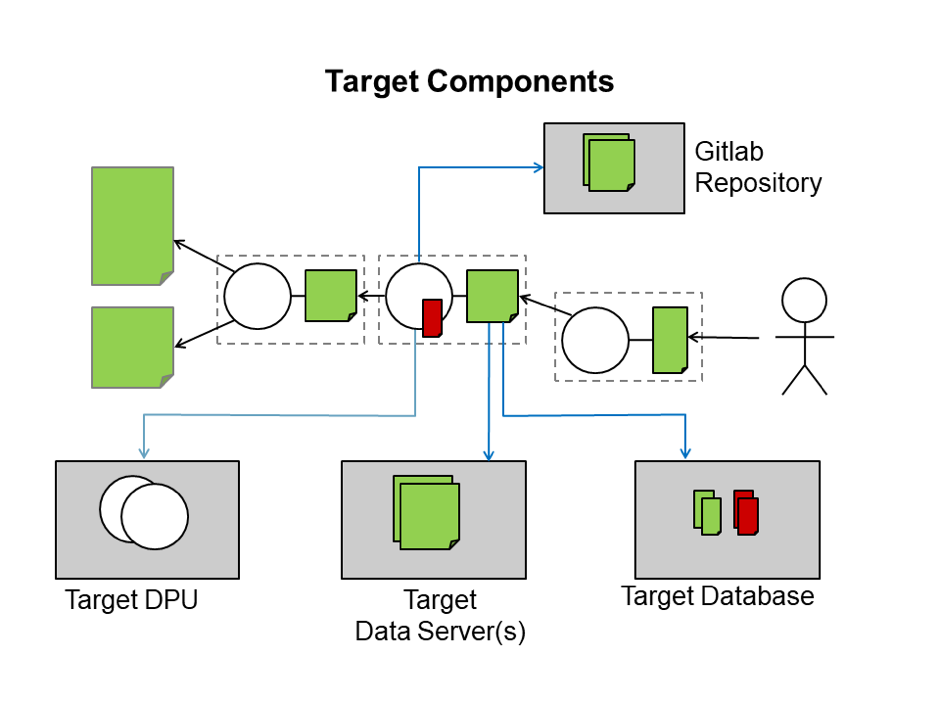

Target Database Component

The database component plays a central role in the generic layer. Typically, all data is stored here except for the bulk data files, which are stored in the WISE Data Servers. The lineage of an object, i.e. how the object was created, is carefully preserved. It is possible to create an object-oriented data model (using e.g. XSD or XML) and then automatically generate the Python code for creating, populating and querying an instance of this data model. A range of underlying database technologies can be used (e.g. Oracle, PostgreSQL, Cache).
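As a rough illustration of what preserving lineage can mean in an object-oriented data model, the sketch below records, for each object, the step that produced it and the objects it was derived from. The class `DataObject`, its fields and the example object names are hypothetical and are not taken from the Target code base; the real component stores this information in a database rather than in memory.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataObject:
    """Hypothetical persistent object; not the actual Target class."""
    name: str
    process: str = "ingest"  # name of the step that produced this object
    inputs: List["DataObject"] = field(default_factory=list)  # direct parents

    def lineage(self) -> List[str]:
        """Return the names of all ancestors, nearest first, without duplicates."""
        seen: List[str] = []
        queue = list(self.inputs)
        while queue:
            parent = queue.pop(0)  # breadth-first walk up the dependency graph
            if parent.name not in seen:
                seen.append(parent.name)
                queue.extend(parent.inputs)
        return seen


# Illustrative objects only: two raw inputs, one derived product, one catalogue.
raw = DataObject("raw_frame")
bias = DataObject("bias_frame")
reduced = DataObject("reduced_frame", process="reduce", inputs=[raw, bias])
catalog = DataObject("source_catalog", process="extract", inputs=[reduced])

print(catalog.lineage())
```

Because every object keeps references to its inputs, the question "how was this object created?" can be answered by walking those references, which is the property the database component is described as preserving.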

Target Distributed Data Servers

A Target Data Server provides a method of accessing data on any storage medium via a modified form of the HyperText Transfer Protocol (HTTP). Simple Put and Get operations are possible, as is access via wget and curl. The system can also deliver only part of a file if required. The system is independent of the underlying hardware, so it allows a wide range of disparate hardware, file systems and data abstraction layers to be used (see iRODS and dCache below).
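The Put, Get and partial-file access described above can be sketched with Python's standard `urllib.request` module. This is a minimal sketch under assumptions: the host name and path are invented, and it assumes partial delivery is requested with the standard HTTP `Range` header, which the modified protocol used by the Data Servers may or may not follow. The requests are only constructed here, not sent.

```python
import urllib.request
from typing import Optional


def build_get(url: str, start: Optional[int] = None,
              end: Optional[int] = None) -> urllib.request.Request:
    """Build a GET request; if start is given, ask for a byte range only."""
    req = urllib.request.Request(url, method="GET")
    if start is not None:
        last = "" if end is None else str(end)
        # Assumption: the server honours the standard HTTP Range header.
        req.add_header("Range", f"bytes={start}-{last}")
    return req


def build_put(url: str, payload: bytes) -> urllib.request.Request:
    """Build a PUT request that uploads payload to url."""
    req = urllib.request.Request(url, data=payload, method="PUT")
    req.add_header("Content-Type", "application/octet-stream")
    return req


# Hypothetical Data Server address and file name, for illustration only.
url = "http://dataserver.example.org:8000/frames/image001.fits"
partial = build_get(url, 0, 2879)        # first 2880 bytes of the file
upload = build_put(url, b"example bytes")

print(partial.get_method(), partial.get_header("Range"))
print(upload.get_method())
```

Passing either request object to `urllib.request.urlopen` would actually send it; that step is left out so the sketch runs without a server.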

Data Servers can be grouped to form distributed systems. To improve performance, data can be replicated on more than one Data Server. Extra Data Servers can be added on the fly.

Target Distributed Processing Unit (DPU)

The basic unit for processing is the DPU, which provides a front end to a compute cluster. Jobs can be submitted via this interface without detailed knowledge of grid computing methods. The DPUs support parallel and sequential jobs, plus job synchronization.
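The pattern of parallel jobs, a synchronization point and a sequential follow-up job can be sketched locally with Python's `concurrent.futures`. This is not the DPU interface: a thread pool stands in for the compute cluster, and the functions `reduce_frame`, `combine` and `run_pipeline` are hypothetical placeholders for real processing steps.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def reduce_frame(frame_id: int) -> int:
    """Stand-in for an independent job that can run in parallel."""
    return frame_id * frame_id


def combine(results: List[int]) -> int:
    """Stand-in for a sequential job that needs all parallel results."""
    return sum(results)


def run_pipeline(frame_ids: Iterable[int]) -> int:
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Parallel stage: one job per frame; map returns results in input order.
        partial = list(pool.map(reduce_frame, frame_ids))
    # Synchronization point: leaving the with block means every job has finished.
    # Sequential stage: runs only after all parallel jobs are complete.
    return combine(partial)


total = run_pipeline([1, 2, 3, 4])
print(total)
```

A front end such as the DPU hides the equivalent scheduling details of the underlying cluster, so the submitting code only has to express which jobs are independent and which must wait.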

A DPU can currently be implemented on any of the following types of clusters:

- OpenPBS-managed clusters, such as the UG High Performance Cluster (HPC)
- gLite-managed clusters (EGEE, Enabling Grids for E-sciencE)
- JABOM (Just A Bunch of Machines)

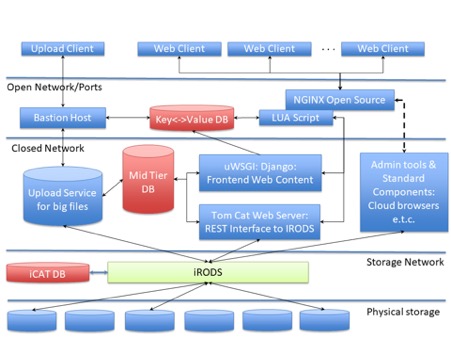

RUG Research Data Management System

For systems that do not need the full power of the Target database and storage components described above, another option is the RUG Research Data Management System. Developed to aid researchers at the University of Groningen, it uses the iRODS system to store both data and its associated metadata (see below).

iRODS

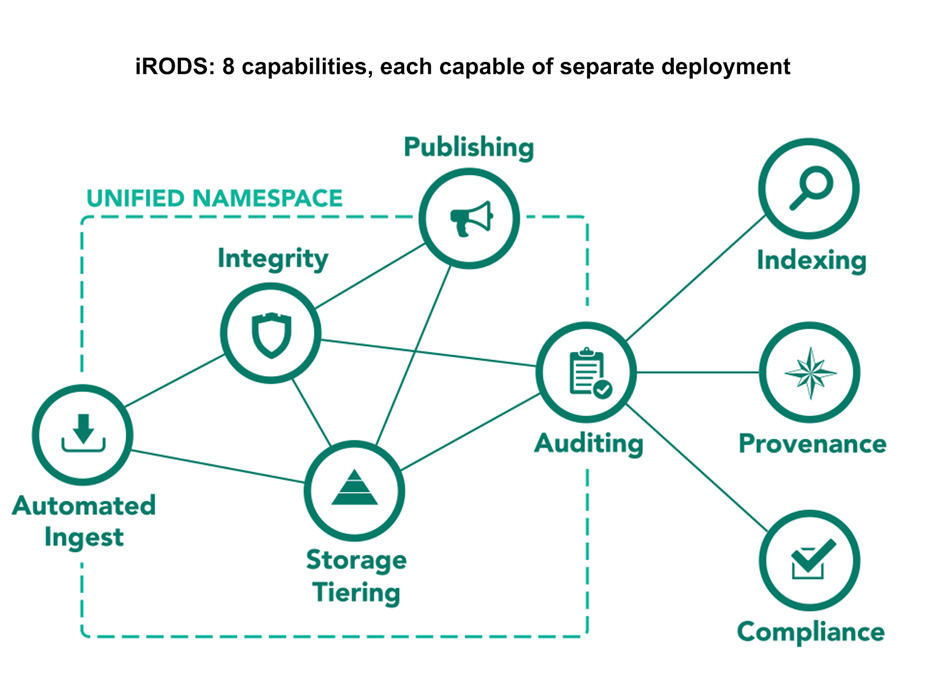

The Integrated Rule-Oriented Data System (iRODS) is open-source data management software used by research organizations and government agencies worldwide. It supports data storage and workflow. Metadata is limited to key-value pairs, unlike the Target Database Component, which supports complex object-oriented models. Data can be migrated between hardware platforms according to pre-defined policies. iRODS storage can be integrated into the Target Data Servers.

dCache

dCache provides a system for storing and retrieving huge amounts of data, distributed among a large number of heterogeneous server nodes, under a single virtual filesystem tree. Developed by the high-energy physics community, it provides a high level of performance. In the Target Field Lab the intention is to use it for tape storage, but this is still in development and other uses may be considered. As with iRODS, it will be possible to integrate dCache storage into the Target Data Servers.