Exploring big data in astronomy: an interview with Gijs Verdoes Kleijn and Rees Williams

In astronomy, collecting and analyzing vast data sets has become crucial to uncovering the secrets of the universe. Gijs Verdoes Kleijn and Rees Williams both work in data-intensive astronomy, and each brings a wealth of experience and expertise. In this interview, they share their insights into the challenges and innovations involved in managing astronomical data.

Gijs Verdoes Kleijn is a big data astronomer at the Kapteyn Astronomical Institute, which houses the OmegaCEN Astronomical Science Data Center, and is known for his work in astronomical data science. His research focuses on fishing for exotic objects within vast oceans of astronomical data.

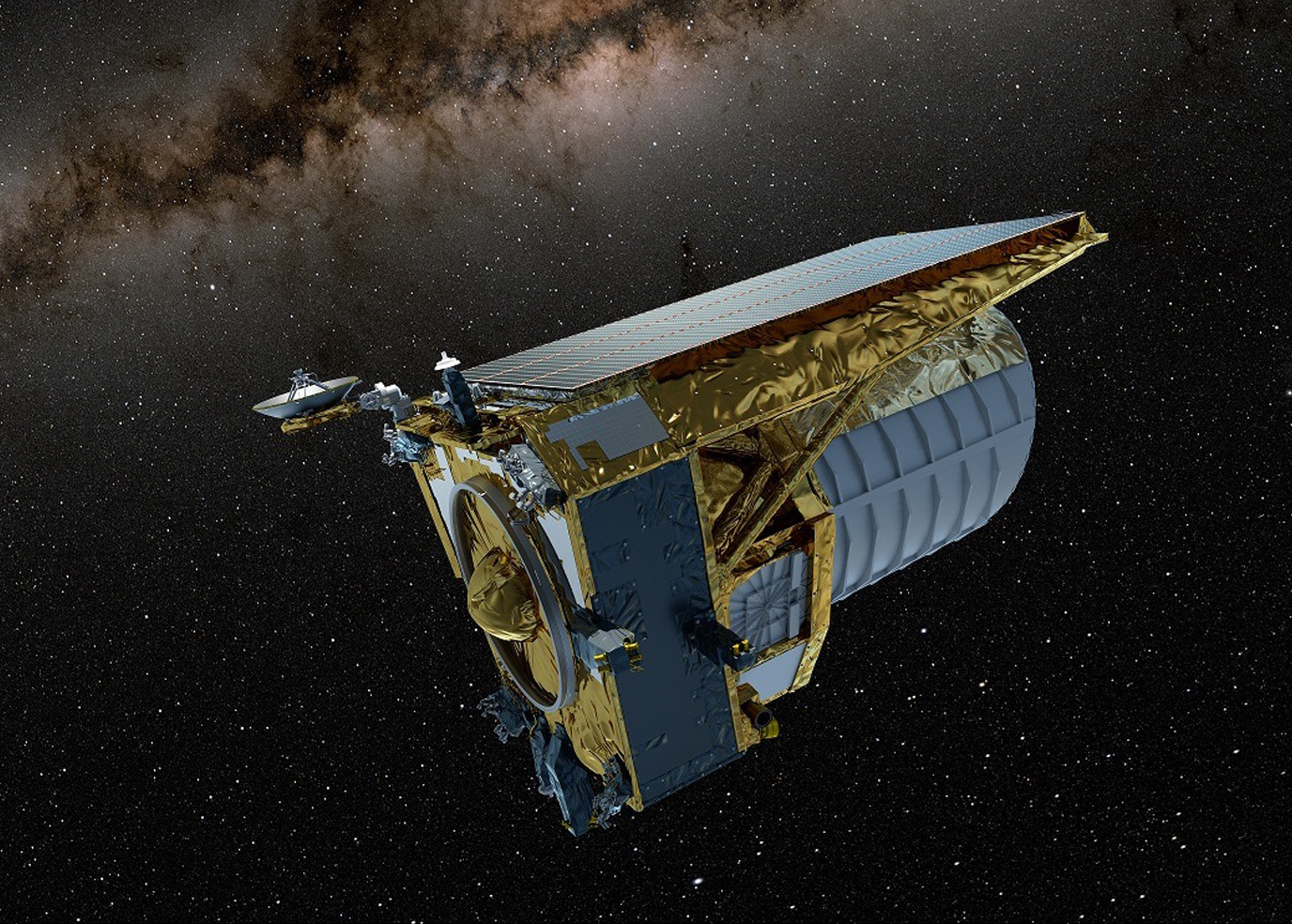

Rees Williams, a project manager at the UG Center for Information Technology (CIT) and OmegaCEN, has over 35 years of experience in processing and archiving data from satellite-mounted astronomical instruments. His expertise spans X-ray, gamma-ray, and optical instruments, and his latest project is the Euclid satellite.

It's not just the size that makes it big data; it's also the complexity.

What type of big data do you typically work with in your research?

Gijs: I typically work with imaging data. This involves handling raw data volumes ranging from a few hundred terabytes to petabytes. It's not just the size that makes it big data; it's also the complexity. The images require extensive analysis and processing to calibrate them for scientific use. The results of the scientific analysis are then stored in a database, creating a rich source of astronomical information.

Rees: It's not only about the volume but also the complexity and changeability of the data. For example, with projects like Euclid, the datasets are highly complex and often require frequent updates and recalibrations.

Gijs: Rees is right. For some projects, you can easily recreate your database when you find a better way to process the data. However, if you're dealing with terabytes of metadata accumulated over years, you can't just discard it. You must carefully retain past data while adding new insights, which demands a great deal of agility and flexibility from your database as it evolves.

What kind of databases are we talking about?

Gijs: We use relational database management systems.

Rees: Indeed, our database uses a classic relational structure, but it can behave like an object database depending on what we need. This dual functionality allows us to handle and query data more naturally, as astronomers often need to interact with data objects like stars or galaxies.

Maintaining a database accessible to anyone globally requires significant resources.

Are these databases open?

Rees: The final results are open, but the entire database system is usually not fully public due to funding limitations.

Gijs: I've been involved in projects where end results are exported for complete open access, such as with the European Southern Observatory. However, full open access to the database is only possible with sufficient funding to support such an open system. Maintaining a database accessible to anyone globally requires significant resources.

Rees: In my experience with satellite databases, particularly for European satellites, the open archives contain all the data, but it is the metadata that users search to select what they need. The European Space Agency (ESA) archive, for instance, holds data from about 30 to 40 satellites. To manage them effectively, a standardized architecture is crucial. This approach makes maintenance easier and ensures the longevity and accessibility of the data.

What are some of the main challenges you face when it comes to managing, archiving, and making these large data sets open?

Rees: The main challenge is making data accessible in a way that people can easily use. For example, with Euclid, giving everyone access to the processing system would be almost impossible. Instead, we offer access through familiar interfaces such as the Virtual Observatory.

Gijs: Another challenge is ensuring that astronomers are trained in data science. Many are experts in astrophysics but not necessarily in using complex databases. This gap can make even open data difficult to utilize effectively.

Another important aspect of open data is metadata. In astronomy, metadata is standardized. Could you tell us how it became so successful?

The nature of our data, which is apolitical and free from privacy issues, aids in its standardization.

Rees: The standardization of data files, particularly the Flexible Image Transport System (FITS) format, always struck me as somewhat accidental, yet it became universally adopted. However, while public archives consistently follow these standards, internal processing systems often do not, so their data must be converted to the standard format. Similarly, metadata is standardized according to Virtual Observatory rules, but again, this applies only to public archives, not to processing systems.

Gijs: Astronomers have done well in adopting standards, partly because our community is relatively small and internationally collaborative. The nature of our data, which is apolitical and free from privacy issues, also aids in this standardization.

Rees: I think it's important to note that there are only a limited number of common data types, including images, spectra, data cubes, and time series. Data of non-standard types, such as those from gamma-ray experiments, becomes increasingly difficult to work with. For example, COMPTEL's raw data is a five-dimensional data cube: one dimension is energy, and none of the others allows a photon to be associated with a position in the sky. So while the data is findable, it is no longer usable, which falls short of the FAIR (Findable, Accessible, Interoperable, Reusable) data principles.

Hardware is cheap, but people are expensive.

What are some lessons you have learned from handling and storing big data?

Gijs: One key lesson is the importance of data lineage: "snail trails" that make it possible to trace every piece of data back to its origin. For instance, if you have a galaxy morphology result, you need to know how accurately it was measured. If its error bar is suspect, you might spend days tracking down the true source of the error. Ideally, the database should document in detail how every result was derived, allowing users to follow each step back to the original data. Building such a comprehensive and transparent database is a complex, challenging, but ultimately rewarding task that requires significant time and collaboration.
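To make the idea of a "snail trail" concrete, here is a minimal Python sketch, assuming a hypothetical schema in which every data product records the processing step that created it and the products it was derived from. The class and function names (`Product`, `lineage`, `raw_origins`) and the example products are illustrative only; this is not how OmegaCEN or any specific archive implements lineage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """A data product with a minimal, hypothetical provenance record."""
    name: str
    step: str            # processing step that produced this product
    inputs: tuple = ()   # upstream Product objects it was derived from


def lineage(product, depth=0):
    """Yield (depth, product) pairs, walking back from a result to its origins."""
    yield depth, product
    for parent in product.inputs:
        yield from lineage(parent, depth + 1)


def raw_origins(product):
    """Return the sorted names of all origin products (those with no inputs)."""
    return sorted({p.name for _, p in lineage(product) if not p.inputs})


# Hypothetical chain: raw frame + flat field -> calibrated image
# -> source catalog -> galaxy morphology result
raw = Product("raw_frame_001", "observation")
flat = Product("flat_field_2024", "calibration_observation")
calib = Product("calibrated_image", "flat-fielding", (raw, flat))
catalog = Product("source_catalog", "source_extraction", (calib,))
morph = Product("galaxy_morphology", "profile_fitting", (catalog,))

# Print the trail, indented by how many steps each product lies upstream
for depth, p in lineage(morph):
    print("  " * depth + f"{p.name}  <- {p.step}")
```

Walking the graph backwards from a suspect result, such as a morphology measurement with a doubtful error bar, lists every upstream product, including the calibration data, that could be the source of the problem.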

Rees: Another lesson is that hardware is cheap, but people are expensive. Projects often suffer from trying to use existing, heterogeneous computing resources, which increases complexity and cost. It's better to invest in uniform, efficient hardware solutions, although this is politically difficult.

How do you ensure the quality and reliability of the data you collect and analyze?

Rees: For our current project, the key challenge is ensuring data quality. This requires significant effort and an assessment system. When data is produced, it must be evaluated for quality. If issues are found, traceability is crucial to identify and address the root cause. This process is iterative; quality flags alone are not enough.

Gijs: Ensuring data quality involves both detecting and diagnosing quality issues. Detecting issues is often straightforward, such as recognizing when astronomical data doesn't match expected astrophysical patterns. However, diagnosing the source of these issues is more complex and requires traceability, as Rees mentioned. For instance, calibration data might be affected by unrecognized events like a solar storm, leading to incorrect conclusions. Misinterpreted signals can result from overlooked factors, such as dust in the Milky Way or errors in satellite orbit corrections. Effective traceability allows for identifying and correcting these errors to ensure reliable results.

Combining large language models with our current data infrastructure could revolutionize how we conduct astronomical research.

What advancements or developments do you anticipate in the field of big data management and sharing in astronomy?

Gijs: I dream of a future where we can interact directly with data processing systems using natural language. Imagine asking a program to analyze star variability in a specific area, having it execute the task, and then reviewing and refining the results interactively. Combining large language models with our current data infrastructure could revolutionize how we conduct astronomical research.

Rees: I see the need to process data close to where it is archived to improve efficiency. This shift requires changes in funding models to support international cooperation and resource sharing. Systems like ESA Datalabs are a step in the right direction, but we need to go much further. We might adopt a model similar to that of supercomputing centers such as SURF, where researchers apply for processing time directly at the data archive.
