A human should decide when it comes to matters of life or death

From medical diagnoses to autonomous weapons in the Middle East: artificial intelligence (AI) is making more and more decisions on its own without a human involved. Rineke Verbrugge, Professor of Logic and Cognition at the University of Groningen, believes that has to change: AI should be an addition to human intelligence, not a replacement. She is the co-founder of the Hybrid Intelligence Centre, which has existed for five years now and recently received the green light for another five years.

FSE Science Newsroom | Text Charlotte Vlek | Images Leoni von Ristok

Computers can recognize patterns at lightning speed, analyse large amounts of data, and help us remain objective, but to preserve human norms and values, a human always needs to be involved, Verbrugge believes. ‘Consider medical diagnoses: AI is really good at diagnostics, for example by analysing MRI scans, but when it comes to matters of life or death a human should make the final decision.’

The Hybrid Intelligence Centre’s mission, therefore, is to design AI systems that can function as an extension of our human mind. That means that the system does not simply spit out a final verdict, such as a medical diagnosis or the shortest route home, but that it can work together with and adjust to people, make responsible choices, and explain why it makes these choices. A doctor could then reach a diagnosis together with an AI algorithm, based on data and conversations with the patient.

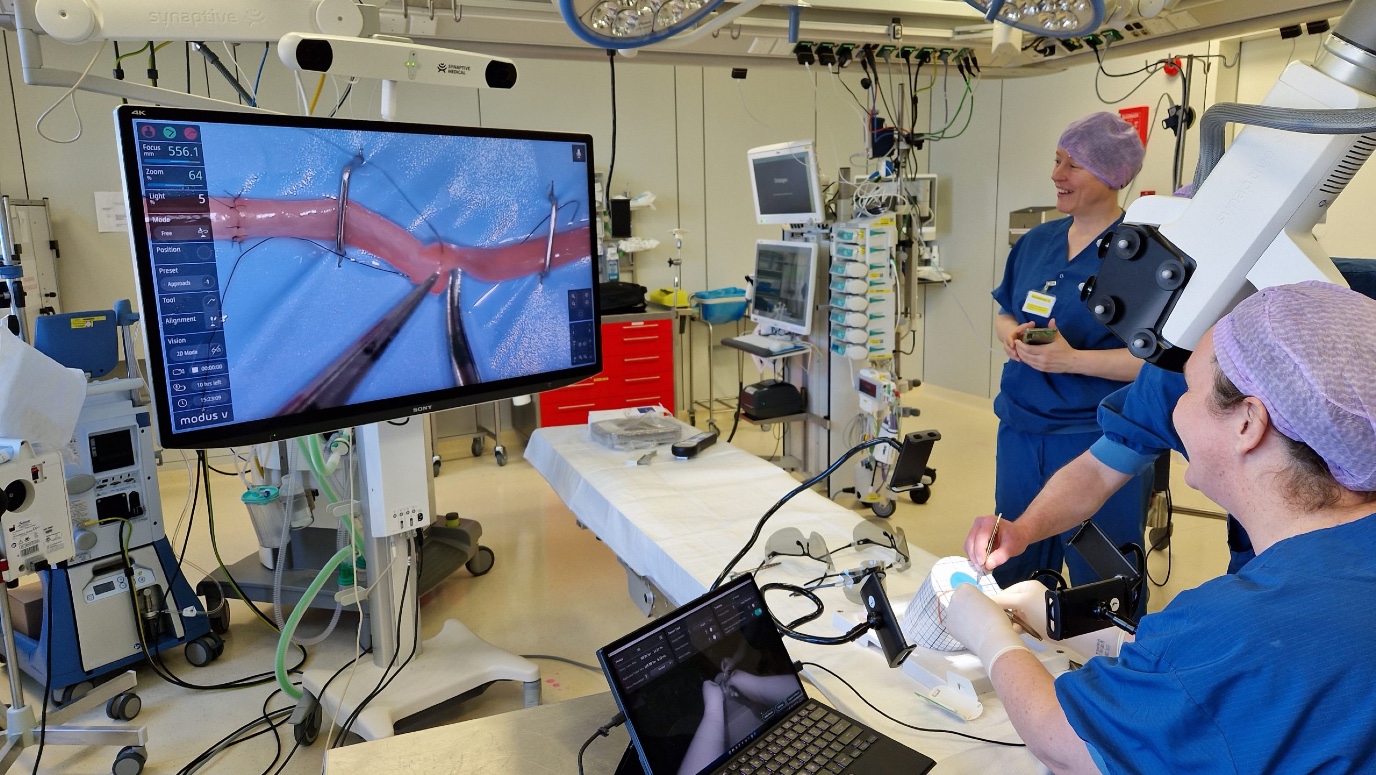

A cooperative robotic arm

The research of the Hybrid Intelligence Centre always focuses on specific applications, Verbrugge says. ‘We have been working on this for five years now, and in another five years, we hope to have developed those applications to the extent that someone can bring them to market.’ The applications range from a robot attendant that can answer visitors’ questions in a museum to a robotic arm that can assist a surgeon during an operation.


‘This particular robotic arm has a camera with which it films close-up footage that the surgeon can see on a screen. In this scenario, it is then handy if the robotic arm anticipates what the surgeon is going to do, or if it briefly consults them: “Would you like me to take a look from the other side next?”’ Such a robotic arm could also estimate when a surgeon is becoming tired and advise them that it may be time for someone else to take over. ‘But a surgeon would never listen to that, of course!’ Verbrugge laughs. That is why it is important that hybrid intelligence also understands how people are wired.

What someone else thinks you think

Computers are like idiots savants: they are incredibly good, but only within a narrow domain. They lack the skill to understand people’s motivations, something a four-year-old child can already do. This skill is called theory of mind: being able to reason about the thoughts, intentions, and beliefs of others. Verbrugge has been researching this topic for almost twenty years.

The Sally-Anne task

The standard test to research theory of mind in children consists of a short story about Sally and Anne. Sally puts a marble in her basket and leaves the room. When she is gone, Anne takes the marble out of the basket and puts it in a box. Then Sally comes back into the room. A young test subject will now be asked: ‘Where does Sally think her marble is hidden?’

A child who has not developed theory of mind yet will reply that Sally will look for her marble in the box, because the child knows that the marble is indeed in there. They do not understand yet that Sally does not know this. A child with so-called first-order theory of mind will say that Sally is going to look in her basket because that is where she put the marble before leaving the room.

This exercise becomes a bit more difficult (second-order theory of mind) when the question is not about what Sally thinks but about what Sally thinks that Anne thinks. For example: where does Sally think that Anne thinks the marble is? Verbrugge explains that theory of mind is very domain-specific. A child may, for example, pass a second-order story test, while reasoning in a game about what the opponent thinks that you think they are going to do is still a bridge too far.
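For readers who like to see the reasoning spelled out, the story can be written as a small belief-tracking sketch in Python. This is only an illustration, not a method used by the researchers: the event names, the `track_beliefs` function, and the rule that a person updates a belief only when they witness the marble being moved are all assumptions made for this example. A nested belief such as ‘Sally thinks that Anne thinks’ is updated only when everyone in the chain is in the room.

```python
from itertools import permutations

AGENTS = ("Sally", "Anne")

# The story as (event, argument) pairs. The event names are made up for this sketch.
STORY = [
    ("move", "basket"),  # Sally puts the marble in her basket
    ("leave", "Sally"),  # Sally leaves the room
    ("move", "box"),     # Anne moves the marble while Sally is away
    ("enter", "Sally"),  # Sally comes back
]


def track_beliefs(story, agents=AGENTS, max_order=2):
    """Return the real marble location and everyone's (nested) beliefs.

    beliefs[("Sally",)] is where Sally thinks the marble is.
    beliefs[("Sally", "Anne")] is where Sally thinks Anne thinks it is.
    """
    present = set(agents)
    location = None
    beliefs = {}
    # All belief chains up to the requested order, e.g. ("Sally", "Anne").
    chains = [c for n in range(1, max_order + 1) for c in permutations(agents, n)]
    for event, arg in story:
        if event == "leave":
            present.discard(arg)
        elif event == "enter":
            present.add(arg)
        elif event == "move":
            location = arg
            # Assumption: a chain is updated only if everyone in it saw the move.
            for chain in chains:
                if all(agent in present for agent in chain):
                    beliefs[chain] = arg
    return location, beliefs


location, beliefs = track_beliefs(STORY)
print("No theory of mind answers:", location)
print("First order (Sally thinks):", beliefs[("Sally",)])
print("Second order (Sally thinks Anne thinks):", beliefs[("Sally", "Anne")])
```

A child without theory of mind simply reports the real location, while the first-order and second-order answers come from the beliefs stored for each chain of people.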

A robot in a team of humans

Verbrugge investigates theory of mind from a unique combination of logic and cognitive science. Together with computer scientists, psychologists, and linguists, she studies how people apply theory of mind and where they go wrong. The goal: by understanding how humans acquire this skill, you could also teach it to a computer. Simply programming the computer to do it will not work, Verbrugge explains. ‘You could programme a computer to reason about a human being if they were to be perfectly logical, but we, as humans, are not perfect. Far from it, actually.’


People do not always want to work together, for example, or do not always have good intentions. This is especially complicated for an AI that has to learn to work in a mixed team with people. One person may be eager to get promoted, for example, and another may therefore not want to work with them. ‘An AI that understands this is better able to work with these people.’

This is why Verbrugge’s PhD students are investigating, for example, how so-called common ground can emerge within a group of people: the written and unwritten rules that everyone is aware of. These could be about the behaviour of participants in a game, cyclists at a traffic light where all directions turn green at the same time, or about privacy: when is it socially acceptable to share a photograph of a friend on Facebook? And how do you actually know when a person is lying; can you teach that to a small child as well? And to a computer?

The next generation of AI researchers

Verbrugge finds it important to also reach young AI researchers outside her research group with the Hybrid Intelligence Centre's vision. As Director of Training and Education, she organizes biweekly gatherings about Hybrid Intelligence for PhD students of the research centre, as well as courses that are also open to PhD students from outside the centre. The University of Groningen will soon even be the first university in the world to have a Hybrid Intelligence Master’s track within the AI Master’s degree programme. Verbrugge: ‘For students who love programming but also want to focus on people.’

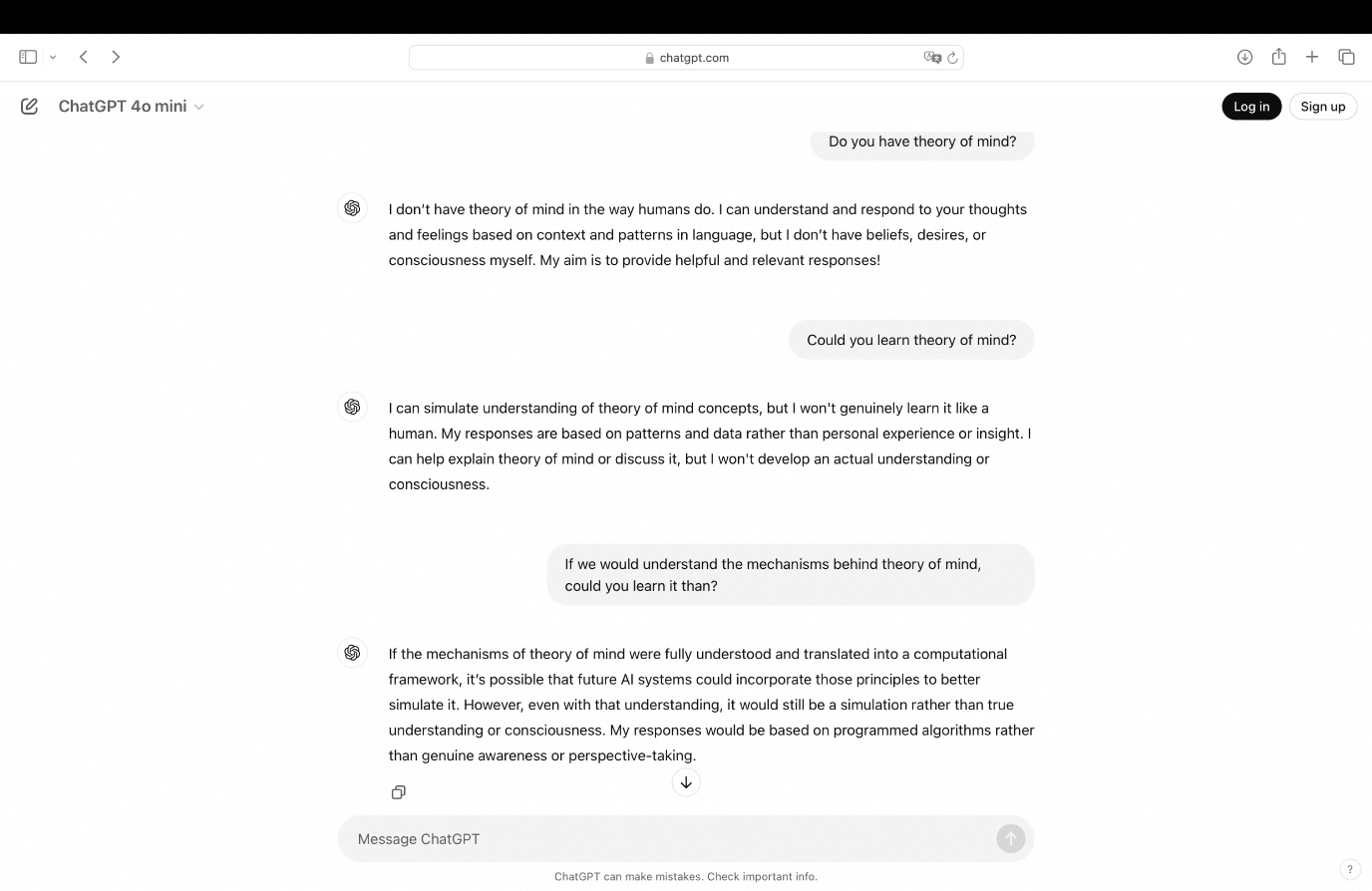

Does ChatGPT have theory of mind?

‘Theory of mind is currently a bit of a buzzword within AI,’ Verbrugge says. For example, is a huge language model such as ChatGPT capable of developing theory of mind? To test this, researchers presented a story test (see box: Sally-Anne task) to such a chat model. The result? The language model got the right answer! ‘But,’ Verbrugge says, ‘there are of course countless examples of these story tests on the internet, from which the language model may have gotten the answer.’

Harmen de Weerd, assistant professor in Verbrugge's group, explains: ‘With a slight variation in the story, the language model already fails. If Anne puts Sally’s marble in a transparent box, for example, the AI still thinks that Sally does not know her marble was moved. Whereas a child can understand that Sally can easily see the marble lying in the transparent box.’ So ChatGPT is still a toddler, in that regard.
